Kubernetes 简介及快速实战

1. 简介

云平台基础概念

IaaS:基础设施服务

PaaS:平台服务

SaaS:软件服务

kubernetes与docker swarm对比:

关于Kubernetes和Docker有无数的争论和讨论。如果你没有深入研究它们,你会认为这两种开源技术在争夺容器(container)霸权。 让我们来一看清楚,Kubernetes和Docker Swarm不是竞争对手!两者都有各自的优缺点,可以根据你应用程序的需求来选择使用。(择优选用,相互协作)

Docker是一种容器管理服务,它帮助开发人员设计应用程序,使用容器能更容易地创建、部署和运行应用程序。Docker有一个用于集群容器的内置机制,称为“集群模式”(swarm mode)。使用集群模式,你可以使用Docker引擎在多台机器上启动应用程序。

Docker Swarm是Docker自己针对Docker容器的原生集群解决方案,它的优点是紧密集成到Docker的生态系统中,并且使用自己的API。它监视跨服务器集群的容器数量,是创建集群docker应用程序的最方便的方法,不需要额外的硬件。它为Dockerized应用程序提供了一个小型但有用的编排系统。

使用Docker Swarm的优点

1. 更快的运行速度

2. 完备的相关技术文档

3. 快速简单的配置

4. 确保程序独立(容器间低耦合)

5. 版本控制与组件重用

使用Docker Swarm的缺点

1. 跨平台支持效果差

2. 不提供存储选项

3. 监控信息不足

为了避免这些不足,可以使用Kubernetes.

当在多台机器上的多个容器中使用不同组件开发应用程序时,需要一个工具来管理和协调容器。这只有在Kubernetes的帮助下才可行。

Kubernetes是一个用于在集群环境中管理容器化应用程序的开源系统。以正确的方式使用Kubernetes,可以帮助DevOps作为一个服务团队自动扩展应用程序,并在零停机的情况下进行更新。

Kubernetes的优点

1.它的速度很快:在不停机的情况下持续部署新功能时,Kubernetes是一个完美的选择。

Kubernetes的目标是以恒定的正常运行时间更新应用程序。它的速度通过您每小时可以运送的许

多功能来衡量,同时保持可用的服务。

2.遵循不可变基础架构的原则: 在传统的方法中,如果多个更新出现错误,您没有任何关于部署了多

少个更新以及发生错误的时间点的记录。在不可变的基础架构中,如果想要更新任何应用程序,需

要使用新标记构建容器映像并部署它,用旧映像版本销毁旧容器。这样,你就会有一个记录,并了

解你做了什么,万一有什么错误;您可以轻松地回滚到前面的映像

3.提供声明式配置: 用户可以知道系统应该处于什么状态以避免错误。源代码控制、单元测试等传统

工具不能与命令式配置一起使用,但可以与声明式配置一起使用

4.大规模部署和更新软件:由于Kubernetes具有不可变的声明性,因此扩展很容易。Kubernetes提

供了一些用于扩展目的的有用功能:

- 水平基础架构扩展:在单个服务器级别执行操作以应用水平扩展。可以毫不费力地添加或分离

atest服务器。

- 自动扩展:根据CPU资源或其他应用程序指标的使用情况,您可以更改正在运行的容器数

- 手动扩展:您可以通过命令或界面手动扩展正在运行的容器的数量

- 复制控制器:复制控制器确保集群在运行状态下具有指定数量的等效pod。如果存在太多

pod,则复制控制器可以删除额外的pod,反之亦然。

5.处理应用程序的可用性:Kubernetes检查节点和容器的运行状况,并在由于错误导致的盒中崩溃

时提供自我修复和自动替换。此外,它在多个pod之间分配负载,以便在意外流量期间快速平衡资

源

6.存储卷: 在Kubernetes中,数据是在容器之间共享的,但是如果pod被杀死,则自动删除卷。此

外,数据是远程存储的,因此如果将pod移动到另一个节点,数据将一直保留,直到用户删除它

使用Kubernetes的缺点:

1.初始进程需要时间: 当创建一个新进程时,您必须等待应用程序启动,然后用户才能使用它。如果

您要迁移到Kubernetes,则需要对代码库进行修改,以提高启动流程的效率,这样用户就不会有

不好的体验

2.迁移到无状态需要做很多工作: 如果您的应用程序是集群的或无状态的,那么将不会配置额外的

pod,并且必须重新处理应用程序中的配置

3.安装过程非常单调乏味: 如果不使用Azure、谷歌或Amazon等云提供商,就很难在集群上设置

Kubernetes

Docker和Kubernetes是不同的,但不是竞争对手正如前面所讨论的,Kubernetes和Docker都在不同的级别上工作,但都可以一起使用。Kubernetes可以集成Docker引擎来执行Docker容器的调度和执行。由于Docker和Kubernetes都是容器编排器,所以它们都可以帮助管理容器的数量,还可以帮助DevOps实现。两者都可以自动化运行容器化的基础架构中涉及的大部分任务,并且都是开源软件项目,由Apache License 2.0管理。除此之外,两者都使用YAML格式的文件来控制工具如何编排容器集群。当两者同时使用时,Docker和Kubernetes都是部署现代云架构的最佳工具。随着Docker Swarm的豁免,Kubernetes和Docker都相互补充。

Kubernetes使用Docker作为主要的容器引擎解决方案,Docker最近宣布它可以支持Kubernetes作为其企业版的编排层,除此之外,Docker还批准了经过认证的Kubernetes程序,该程序可确保所有Kubernetes API按预期运行。Kubernetes使用Docker Enterprise的功能,如安全映像管理,其中Docker EE提供映像扫描,以确保容器中使用的映像是否存在问题。另一个是安全自动化,其中组织可以消除低效率,例如扫描映像是否存在漏洞。

如果使用Kubernetes:

您正在寻找成熟的部署和监控选项

您正在寻找快速可靠的响应时间

您正在寻求开发复杂的应用程序,并且需要高资源计算而不受限制

你有一个非常大的集群

如果,使用Docker,

您希望在不花费太多时间进行配置和安装的情况下启动工具;

您正在寻找开发一个基本和标准的应用程序,它足够使用默认的docker镜像;

在不同的操作系统上测试和运行相同的应用程序对您来说不是问题;

您需要zdocker API经验和兼容性。

无论你选择Kubernetes还是Docker,两者都被认为是最好的,并且有相当大的差异。在这两者之间做

出选择的最好方法可能是考虑哪一个您已经比较熟悉,或者哪一个适合您现有的软件堆栈。如果您需要

开发复杂的应用程序,请使用Kubernetes,如果您希望开发小型应用程序,请使用Docker Swarm。此

外,选择正确的项目是一项非常全面的任务,完全取决于您的项目需求和目标受众。

2. 快速部署

kubernetes官网地址:国外网站,访问速度较慢。

https://kubernetes.io/

kubernets中文社区地址:

https://www.kubernetes.org.cn/

k8s集群部署方式:

1. 使用minikube安装单节点集群,用于测试

2. 采用工具kubeadm

3. 使用kubespray,google官方提供的工具。

4. 全手动:二进制方式安装

5. 全自动安装:rancher 、kubesphere

学习k8s,准备至少3台服务器(或3台虚拟机)

K8S集群安全机制:

Kubernetes 作为一个分布式集群的管理工具,保证集群的安全性是其一个重要的任务。API Server 是集群内部各个组件通信的中介,也是外部控制的入口。所以 Kubernetes 的安全机制基本就是围绕保护 APIServer 来设计的。Kubernetes 使用了认证(Authentication)、鉴权(Authorization)、**准入控制(AdmissionControl)**三步来保证API Server的安全

Authentication(认证)

第三方授权协议: authenticating proxy

HTTP Token 认证:通过一个 Token 来识别合法用户

HTTP Base 认证:通过 用户名+密码 的方式认证

最严格的 HTTPS 证书认证:基于 CA 根证书签名的客户端身份认证方式

https证书认证: 采用双向加密的认证方式

证书颁发:

手动签发:通过 k8s 集群的跟 ca 进行签发 HTTPS 证书 自动签发:kubelet 首次访问 API Server 时,使用 token 做认证,通过后,Controller Manager 会为kubelet 生成一个证书,以后的访问都是用证书做认证了安全性说明:Controller Manager、Scheduler 与 API Server 在同一台机器,所以直接使用 API Server 的非安全端口访问, –insecure-bind-address=127.0.0.1. kubectl、kubelet、kube-proxy 访问 API Server 就都需要证书进行 HTTPS 双向认证

kubeconfig 文件包含集群参数(CA证书、API Server地址),客户端参数(上面生成的证书和私钥),集群context 信息(集群名称、用户名)。Kubenetes 组件通过启动时指定不同的kubeconfig 文件可以切换到不同的集群

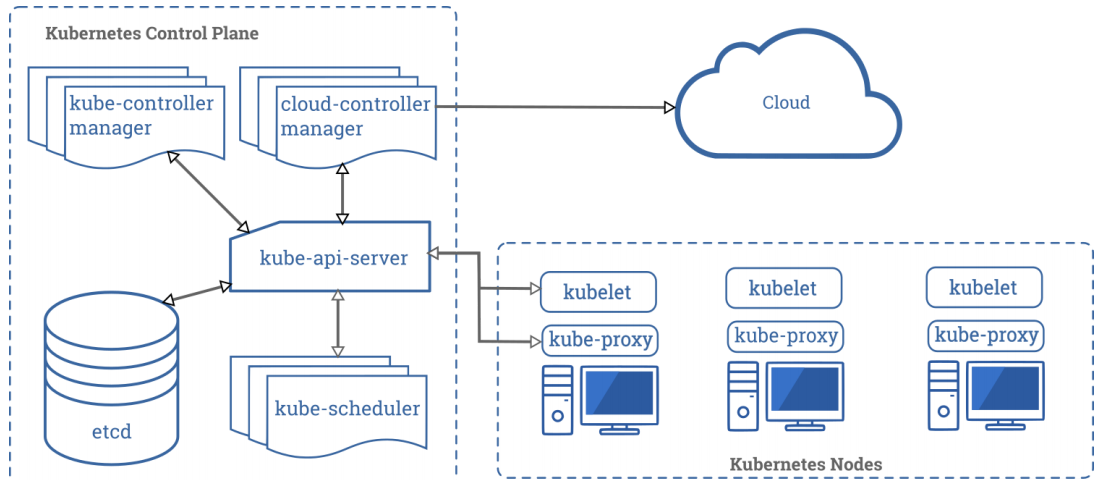

3. k8s基础组件

一个 Kubernetes 集群包含 集群由一组被称作节点的机器组成。这些节点上运行 Kubernetes 所管理的容器化应用。集群具有至少一个工作节点和至少一个主节点。

工作节点: 托管作为应用程序组件的 Pod

主节点: 管理集群中的工作节点和 Pod

下图表展示了包含所有相互关联组件的 Kubernetes 集群:

3.1 控制平面组件(Control Plane Components)

控制平面的组件对集群做出全局决策(比如调度),以及检测和响应集群事件(例如,当不满足部署的replicas 字段时,启动新的 pod)

控制平面组件可以在集群中的任何节点上运行。然而,为了简单起见,设置脚本通常会在同一个计算机上启动所有控制平面组件,并且不会在此计算机上运行用户容器

kube-apiserver

主节点上负责提供 Kubernetes API 服务的组件;它是 Kubernetes 控制面的前端。

1. kube-apiserver是Kubernetes最重要的核心组件之一 2. 提供集群管理的REST API接口,包括认证授权,数据校验以及集群状态变更等 3. 提供其他模块之间的数据交互和通信的枢纽(其他模块通过API Server查询或修改数据,只 有API Server才直接操作etcd) 4. 生产环境可以为apiserver做LA或LB。在设计上考虑了水平扩缩的需要。 换言之,通过部署 多个实例可以实现扩缩。 参见构造高可用集群。etcd

1. kubernetes需要存储很多东西,像它本身的节点信息,组件信息,还有通过kubernetes运行 的pod,deployment,service等等。都需要持久化。etcd就是它的数据中心。生产环境中为 了保证数据中心的高可用和数据的一致性,一般会部署最少三个节点。 2. 这里只部署一个节点在master。etcd也可以部署在kubernetes每一个节点。组成etcd集群。 3. 如果已经有etcd外部的服务,kubernetes直接使用外部etcd服务etcd 是兼具一致性和高可用性的键值数据库,可以作为保存 Kubernetes 所有集群数据的后台数据库。

Kubernetes 集群的 etcd 数据库通常需要有个备份计划。要了解 etcd 更深层次的信息,请参考 etcd 文档。也可以使用外部的ETCD集群

kube-scheduler

主节点上的组件,该组件监视那些新创建的未指定运行节点的 Pod,并选择节点让 Pod 在上面运行

kube-scheduler负责分配调度Pod到集群内的节点上,它监听kube-apiserver,查询还未分配Node的Pod,然后根据调度策略为这些Pod分配节点

kube-controller-manager

在主节点上运行控制器的组件。

Controller Manager由kube-controller-manager和cloud-controller-manager组成,是Kubernetes的大脑,它通过apiserver监控整个集群的状态,并确保集群处于预期的工作状态。

kube-controller-manager由一系列的控制器组成,像Replication Controller控制副本,Node Controller节点控制,Deployment Controller管理deployment等等

cloud-controller-manager在Kubernetes启用Cloud Provider的时候才需要,用来配合云服务提供商的控制

云控制器管理器 cloud-controller-manager(暂不考虑)

cloud-controller-manager 运行与基础云提供商交互的控制器. cloud-controller-manager 仅运行云提供商特定的控制器循环。您必须在 kube-controller-manager 中禁用这些控制器循环,您可以通过在启动 kube-controller-manager 时将 –cloud-provider 参数设置为external 来禁用控制器循环

cloud-controller-manager 允许云供应商的代码和 Kubernetes 代码彼此独立地发展。在以前的版本中,核心的 Kubernetes 代码依赖于特定云提供商的代码来实现功能。在将来的版本中,云供应商专有的代码应由云供应商自己维护,并与运行 Kubernetes 的云控制器管理器相关联。

kubectl

主节点上的组件.

kubectl是Kubernetes的命令行工具,是Kubernetes用户和管理员必备的管理工具。kubectl提供了大量的子命令,方便管理Kubernetes集群中的各种功能

3.2 Node组件

节点组件在每个节点上运行,维护运行的 Pod 并提供 Kubernetes 运行环境。

kubelet

一个在集群中每个节点上运行的代理。它保证容器都运行在 Pod 中。

一个在集群中每个工作节点上都运行一个kubelet服务进程,默认监听10250端口,接收并执行master发来的指令,管理Pod及Pod中的容器。每个kubelet进程会在API Server上注册节点自身信息,定期向master节点汇报节点的资源使用情况,并通过cAdvisor监控节点和容器的资源。

kube-proxy

一个在集群中每台工作节点上都应该运行一个kube-proxy服务,它监听API server中service和endpoint的变化情况,并通过iptables等来为服务配置负载均衡,是让我们的服务在集群外可以被访问到的重要方式。

容器运行环境(container Runtime)

容器运行环境是负责运行容器的软件.

Kubernetes 支持多个容器运行环境: Docker、 containerd、cri-o、 rktlet 以及任何实现 KubernetesCRI (容器运行环境接口)。

3.3 插件

插件使用 Kubernetes 资源 (DaemonSet, Deployment等) 实现集群功能。因为这些提供集群级别的功能,所以插件的命名空间资源属于 kube-system 命名空间

kube-dns

kube-dns为Kubernetes集群提供命名服务,主要用来解析集群服务名和Pod的hostname。目的是让pod可以通过名字访问到集群内服务。它通过添加A记录的方式实现名字和service的解析。普通的service会解析到service-ip。headless service会解析到pod列表

用户界面 dashboard

Dashboard 是 Kubernetes 集群的通用基于 Web 的 UI。它使用户可以管理集群中运行的应用程序以及集群本身并进行故障排除

集群层面日志

集群层面日志 机制负责将容器的日志数据保存到一个集中的日志存储中,该存储能够提供搜索和浏览接口

4. k8s安装与配置

4.1 环境准备

至少3台机器, 2核心4G,50G及以上, centos7.6以上版本

查看centos系统版本命令:

cat /etc/centos-release

配置阿里云yum源:

1.下载安装wget

yum install -y wget

2.备份默认的yum

mv /etc/yum.repos.d /etc/yum.repos.d.backup

3.设置新的yum目录

mkdir -p /etc/yum.repos.d

4.下载阿里yum配置到该目录中,选择对应版本

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

5.更新epel源为阿里云epel源

mv /etc/yum.repos.d/epel.repo /etc/yum.repos.d/epel.repo.backup

mv /etc/yum.repos.d/epel-testing.repo /etc/yum.repos.d/epel- testing.repo.backup

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel- 7.repo

6.重建缓存

yum clean all

yum makecache

7.看一下yum仓库有多少包

yum repolist

yum update

升级系统内核:

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm

yum --enablerepo=elrepo-kernel install -y kernel-lt

grep initrd16 /boot/grub2/grub.cfg

grub2-set-default 0

reboot

查看centos系统内核命令:

uname -r

uname -a

查看CPU命令:

lscpu

查看内存命令:

free

free -h

查看硬盘信息:

fdisk -l

4.2 centos 7系统配置

关闭防火墙

配置端口开放也可以

systemctl stop firewalld systemctl disable firewalld关闭selinux

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux setenforce 0网桥过滤

vi /etc/sysctl.conf net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 net.bridge.bridge-nf-call-arptables = 1 net.ipv4.ip_forward=1 net.ipv4.ip_forward_use_pmtu = 0 生效命令 sysctl --system 查看效果 sysctl -a|grep "ip_forward"开启ipvs

安装IPVS yum -y install ipset ipvsdm 编译ipvs.modules文件 vim /etc/sysconfig/modules/ipvs.modules 文件内容如下 #!/bin/bash modprobe -- ip_vs modprobe -- ip_vs_rr modprobe -- ip_vs_wrr modprobe -- ip_vs_sh modprobe -- nf_conntrack_ipv4 #高版本内核为:nf_conntrack 赋予权限并执行 chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4 重启电脑,检查是否生效 reboot lsmod | grep ip_vs_rr同步时间

安装软件 yum -y install ntpdate 向阿里云服务器同步时间 ntpdate time1.aliyun.com 删除本地时间并设置时区为上海 rm -rf /etc/localtime ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime 查看时间 date -R || date命令实例

安装bash-completion yum -y install bash-completion bash-completion-extras 使用bash-completion source /etc/profile.d/bash_completion.sh关闭swap分区

临时关闭: swapoff -a 永久关闭: vi /etc/fstab 将文件中的/dev/mapper/centos-swap这行代码注释掉 #/dev/mapper/centos-swap swap swap defaults 0 0 确认swap已经关闭:若swap行都显示 0 则表示关闭成功 free -mhosts配置

vim /etc/hosts 192.168.0.87 k8s-master01 192.168.0.37 k8s-node01 192.168.0.152 k8s-node02

4.3 安装docker

#前置条件

yum install -y yum-utils device-mapper-persistent-data lvm2

#添加源

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

#查看docker 更新版本

yum list docker-ce --showduplicates | sort -r

#安装docker最新版本

yum -y install docker-ce

#安装指定版本

yum -y install docker-ce-18.09.8

#开启docker 服务

systemctl start docker

systemctl status docker

#安装阿里云镜像加速器

mkdir -p /etc/docker

vim /etc/docker/daemon.json

{ "registry-mirrors": ["自己的阿里云镜像加速地址"] }

systemctl daemon-reload

systemctl restart docker

#设置docker开机启动服务

systemctl enable docker

#修改Cgroup Driver

#修改daemon.json,新增:

"exec-opts": ["native.cgroupdriver=systemd"]

重启docker服务:

systemctl daemon-reload

systemctl restart docker

查看修改后状态:

docker info | grep Cgroup

修改cgroupdriver是为了消除安装k8s集群时的告警:

[WARNING IsDockerSystemdCheck]:

detected “cgroupfs” as the Docker cgroup driver. The recommended driver is “systemd”.

Please follow the guide at https://kubernetes.io/docs/setup/cri/......

4.4 使用kubeadm快速安装

| 软件 | kubeadm | kubelet | kubectl | docker-ce |

|---|---|---|---|---|

| 版本 | 初始化集群管理,集群版本:1.17.5 | 用于接收api-server指令,对pod生命周期进行管理版本:1.17.5 | 集群命令行管理工具版本:1.17.5 | 推荐使用版: 19.03.8及以上 |

4.5配置yum源

#新建repo文件

vim /etc/yum.repos.d/kubernates.repo

#配置内容

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

#gpgcheck=1

gpgcheck=0

#repo_gpgcheck建议设置为0,不校验, 有时候拉镜像会报错

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

#更新缓存

yum clean all

yum -y makecache

#验证源是否可用

yum list | grep kubeadm

#查看k8s版本

yum list kubelet --showduplicates | sort -r

#安装 k8s-1.17.5

yum install -y kubelet-1.17.5 kubeadm-1.17.5 kubectl-1.17.5

4.6 设置kubelet

增加配置信息

如果不配置kubelet,可能会导致K8S集群无法启动。为实现docker使用的cgroupdriver与kubelet 使用的cgroup的一致性

vim /etc/sysconfig/kubelet KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"设置开机启动

systemctl enable kubelet

4.7 初始化镜像

如果是第一次安装k8s,手里没有备份好的镜像,可以执行如下操作

查看安装集群需要的镜像

kubeadm config images list编写执行脚本

mkdir -p /data cd /data vim images.sh #!/bin/bash # 下面的镜像应该去除"k8s.gcr.io"的前缀,版本换成kubeadm config images list命令获取 到的版本 images=( kube-apiserver:v1.17.17 kube-controller-manager:v1.17.17 kube-scheduler:v1.17.17 kube-proxy:v1.17.17 pause:3.1 etcd:3.4.3-0 coredns:1.6.5 ) for imageName in ${images[@]}; do docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName done执行脚本

#给脚本授权 chmod +x images.sh #执行脚本 ./images.sh保存镜像

#可以将镜像打包,方便之后使用, 这里不再做演示 docker save -o k8s.....tar [images]

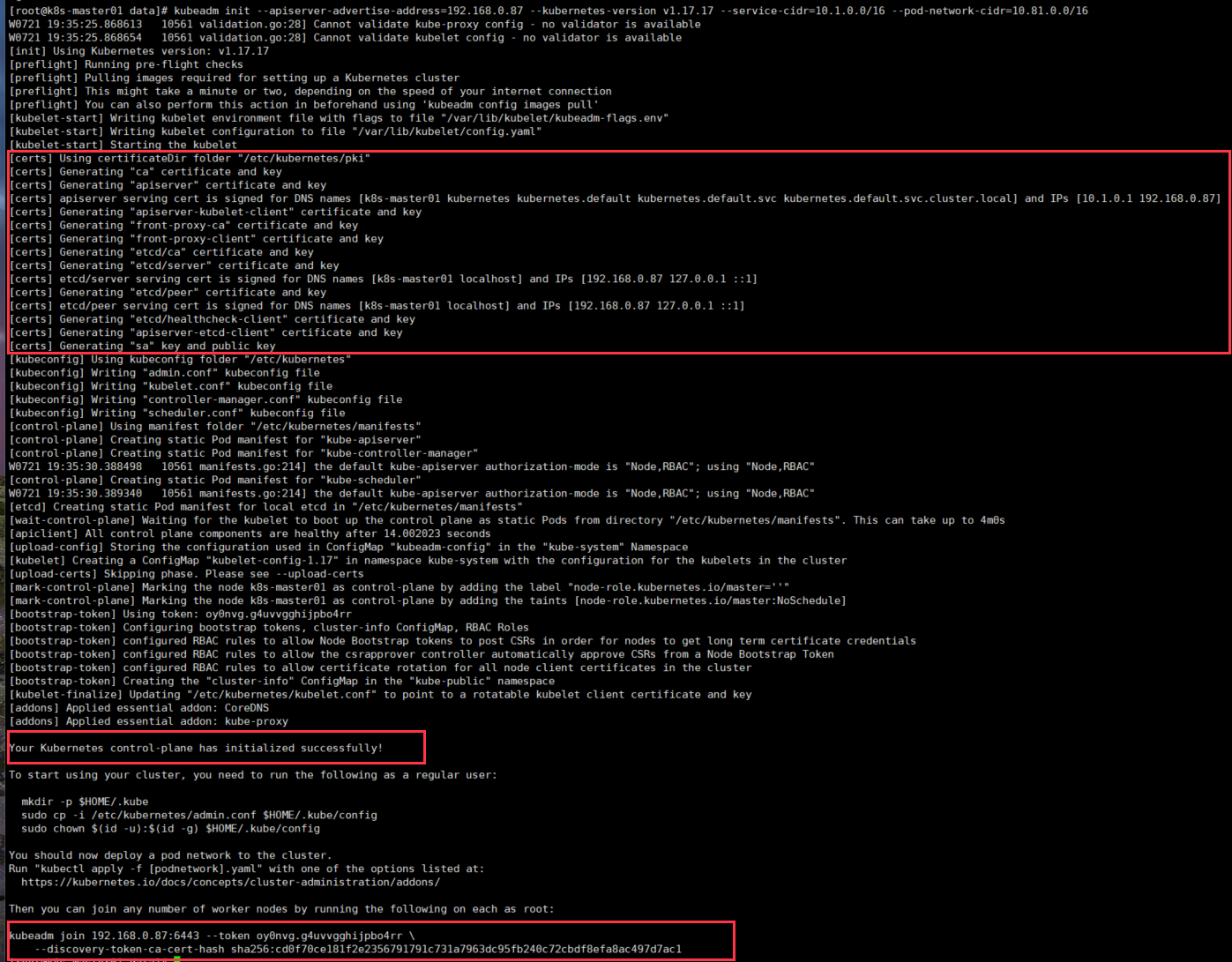

4.8 初始化集群

calico官网地址

官网下载地址: https://docs.projectcalico.org/v3.14/manifests/calico.yaml github地址: https://github.com/projectcalico/calico 镜像下载: docker pull calico/cni:v3.14.2 docker pull calico/pod2daemon-flexvol:v3.14.2 docker pull calico/node:v3.14.2 docker pull calico/kube-controllers:v3.14.2 配置hostname: hostnamectl set-hostname k8s-master01 #使生效 bash初始化集群信息: calico网络

tips: 注意使用到的ip都是你自己服务器或虚拟机的ip

上面我安装的是1.17.5版本,但是上面提示的镜像都是v1.17.17版本的, 不知道为啥, 下面就安装1.17.17版本

kubeadm init --apiserver-advertise-address=192.168.0.87 --kubernetes-version v1.17.17 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.81.0.0/16执行结果见下图:

[root@k8s-master01 data]# kubeadm init --apiserver-advertise-address=192.168.0.87 --kubernetes-version v1.17.17 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.81.0.0/16 W0721 19:35:25.868613 10561 validation.go:28] Cannot validate kube-proxy config - no validator is available W0721 19:35:25.868654 10561 validation.go:28] Cannot validate kubelet config - no validator is available [init] Using Kubernetes version: v1.17.17 [preflight] Running pre-flight checks [preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Starting the kubelet [certs] Using certificateDir folder "/etc/kubernetes/pki" [certs] Generating "ca" certificate and key [certs] Generating "apiserver" certificate and key [certs] apiserver serving cert is signed for DNS names [k8s-master01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.1.0.1 192.168.0.87] [certs] Generating "apiserver-kubelet-client" certificate and key [certs] Generating "front-proxy-ca" certificate and key [certs] Generating "front-proxy-client" certificate and key [certs] Generating "etcd/ca" certificate and key [certs] Generating "etcd/server" certificate and key [certs] etcd/server serving cert is signed for DNS names [k8s-master01 localhost] and IPs [192.168.0.87 127.0.0.1 ::1] [certs] Generating "etcd/peer" certificate and key [certs] etcd/peer serving cert is signed for DNS names [k8s-master01 localhost] and IPs [192.168.0.87 127.0.0.1 ::1] [certs] Generating "etcd/healthcheck-client" certificate and key [certs] Generating "apiserver-etcd-client" certificate and key [certs] Generating "sa" key and public key [kubeconfig] Using kubeconfig folder "/etc/kubernetes" [kubeconfig] Writing "admin.conf" kubeconfig file [kubeconfig] Writing "kubelet.conf" kubeconfig file [kubeconfig] Writing "controller-manager.conf" kubeconfig file [kubeconfig] Writing "scheduler.conf" kubeconfig file [control-plane] Using manifest folder "/etc/kubernetes/manifests" [control-plane] Creating static Pod manifest for "kube-apiserver" [control-plane] Creating static Pod manifest for "kube-controller-manager" W0721 19:35:30.388498 10561 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC" [control-plane] Creating static Pod manifest for "kube-scheduler" W0721 19:35:30.389340 10561 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC" [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests" [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s [apiclient] All control plane components are healthy after 14.002023 seconds [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace [kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster [upload-certs] Skipping phase. Please see --upload-certs [mark-control-plane] Marking the node k8s-master01 as control-plane by adding the label "node-role.kubernetes.io/master=''" [mark-control-plane] Marking the node k8s-master01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule] [bootstrap-token] Using token: oy0nvg.g4uvvgghijpbo4rr [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials [bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token [bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key [addons] Applied essential addon: CoreDNS [addons] Applied essential addon: kube-proxy Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user: mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config You should now deploy a pod network to the cluster. Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root: kubeadm join 192.168.0.87:6443 --token oy0nvg.g4uvvgghijpbo4rr \ --discovery-token-ca-cert-hash sha256:cd0f70ce181f2e2356791791c731a7963dc95fb240c72cbdf8efa8ac497d7ac1执行配置命令

mkdir -p $HOME/.kube cp -i /etc/kubernetes/admin.conf $HOME/.kube/config chown $(id -u):$(id -g) $HOME/.kube/confignode节点加入集群信息

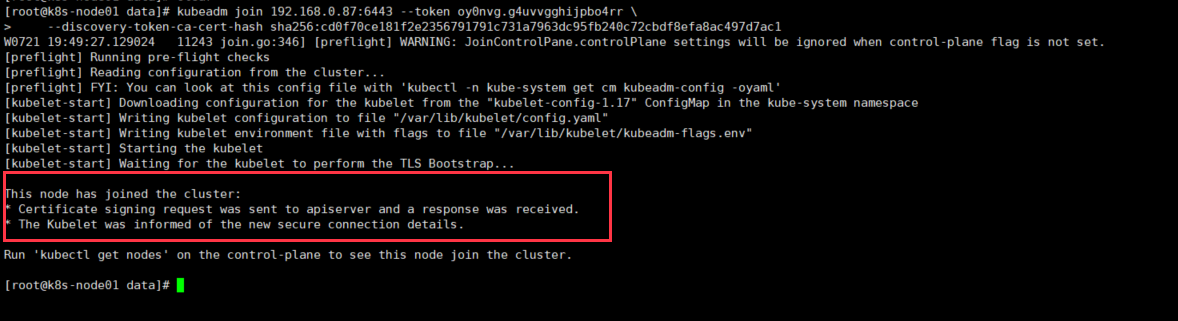

kubeadm join 192.168.0.87:6443 --token oy0nvg.g4uvvgghijpbo4rr \ --discovery-token-ca-cert-hash sha256:cd0f70ce181f2e2356791791c731a7963dc95fb240c72cbdf8efa8ac497d7ac1执行结果:

[root@k8s-node01 data]# kubeadm join 192.168.0.87:6443 --token oy0nvg.g4uvvgghijpbo4rr \ > --discovery-token-ca-cert-hash sha256:cd0f70ce181f2e2356791791c731a7963dc95fb240c72cbdf8efa8ac497d7ac1 W0721 19:49:27.129024 11243 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set. [preflight] Running pre-flight checks [preflight] Reading configuration from the cluster... [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml' [kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Starting the kubelet [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap... This node has joined the cluster: * Certificate signing request was sent to apiserver and a response was received. * The Kubelet was informed of the new secure connection details. Run 'kubectl get nodes' on the control-plane to see this node join the cluster.查看状态

#在master节点 kubectl get nodes NAME STATUS ROLES AGE VERSION k8s-master01 NotReady master 22m v1.17.5 k8s-node01 NotReady <none> 5m25s v1.17.5 k8s-node02 NotReady <none> 5m29s v1.17.5发现为notReady状态: 是因为网络没有安装好

安装网络

我们把前面的calico.yml文件放到/data目录下

--- # Source: calico/templates/calico-config.yaml # This ConfigMap is used to configure a self-hosted Calico installation. kind: ConfigMap apiVersion: v1 metadata: name: calico-config namespace: kube-system data: # Typha is disabled. typha_service_name: "none" # Configure the backend to use. calico_backend: "bird" # Configure the MTU to use for workload interfaces and the # tunnels. For IPIP, set to your network MTU - 20; for VXLAN # set to your network MTU - 50. veth_mtu: "1440" # The CNI network configuration to install on each node. The special # values in this config will be automatically populated. cni_network_config: |- { "name": "k8s-pod-network", "cniVersion": "0.3.1", "plugins": [ { "type": "calico", "log_level": "info", "datastore_type": "kubernetes", "nodename": "__KUBERNETES_NODE_NAME__", "mtu": __CNI_MTU__, "ipam": { "type": "calico-ipam" }, "policy": { "type": "k8s" }, "kubernetes": { "kubeconfig": "__KUBECONFIG_FILEPATH__" } }, { "type": "portmap", "snat": true, "capabilities": {"portMappings": true} }, { "type": "bandwidth", "capabilities": {"bandwidth": true} } ] } --- # Source: calico/templates/kdd-crds.yaml apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: bgpconfigurations.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: BGPConfiguration plural: bgpconfigurations singular: bgpconfiguration --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: bgppeers.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: BGPPeer plural: bgppeers singular: bgppeer --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: blockaffinities.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: BlockAffinity plural: blockaffinities singular: blockaffinity --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: clusterinformations.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: ClusterInformation plural: clusterinformations singular: clusterinformation --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: felixconfigurations.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: FelixConfiguration plural: felixconfigurations singular: felixconfiguration --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: globalnetworkpolicies.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: GlobalNetworkPolicy plural: globalnetworkpolicies singular: globalnetworkpolicy shortNames: - gnp --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: globalnetworksets.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: GlobalNetworkSet plural: globalnetworksets singular: globalnetworkset --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: hostendpoints.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: HostEndpoint plural: hostendpoints singular: hostendpoint --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: ipamblocks.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: IPAMBlock plural: ipamblocks singular: ipamblock --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: ipamconfigs.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: IPAMConfig plural: ipamconfigs singular: ipamconfig --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: ipamhandles.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: IPAMHandle plural: ipamhandles singular: ipamhandle --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: ippools.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: IPPool plural: ippools singular: ippool --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: kubecontrollersconfigurations.crd.projectcalico.org spec: scope: Cluster group: crd.projectcalico.org version: v1 names: kind: KubeControllersConfiguration plural: kubecontrollersconfigurations singular: kubecontrollersconfiguration --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: networkpolicies.crd.projectcalico.org spec: scope: Namespaced group: crd.projectcalico.org version: v1 names: kind: NetworkPolicy plural: networkpolicies singular: networkpolicy --- apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: name: networksets.crd.projectcalico.org spec: scope: Namespaced group: crd.projectcalico.org version: v1 names: kind: NetworkSet plural: networksets singular: networkset --- --- # Source: calico/templates/rbac.yaml # Include a clusterrole for the kube-controllers component, # and bind it to the calico-kube-controllers serviceaccount. kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: calico-kube-controllers rules: # Nodes are watched to monitor for deletions. - apiGroups: [""] resources: - nodes verbs: - watch - list - get # Pods are queried to check for existence. - apiGroups: [""] resources: - pods verbs: - get # IPAM resources are manipulated when nodes are deleted. - apiGroups: ["crd.projectcalico.org"] resources: - ippools verbs: - list - apiGroups: ["crd.projectcalico.org"] resources: - blockaffinities - ipamblocks - ipamhandles verbs: - get - list - create - update - delete # kube-controllers manages hostendpoints. - apiGroups: ["crd.projectcalico.org"] resources: - hostendpoints verbs: - get - list - create - update - delete # Needs access to update clusterinformations. - apiGroups: ["crd.projectcalico.org"] resources: - clusterinformations verbs: - get - create - update # KubeControllersConfiguration is where it gets its config - apiGroups: ["crd.projectcalico.org"] resources: - kubecontrollersconfigurations verbs: # read its own config - get # create a default if none exists - create # update status - update # watch for changes - watch --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: calico-kube-controllers roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: calico-kube-controllers subjects: - kind: ServiceAccount name: calico-kube-controllers namespace: kube-system --- # Include a clusterrole for the calico-node DaemonSet, # and bind it to the calico-node serviceaccount. kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: calico-node rules: # The CNI plugin needs to get pods, nodes, and namespaces. - apiGroups: [""] resources: - pods - nodes - namespaces verbs: - get - apiGroups: [""] resources: - endpoints - services verbs: # Used to discover service IPs for advertisement. - watch - list # Used to discover Typhas. - get # Pod CIDR auto-detection on kubeadm needs access to config maps. - apiGroups: [""] resources: - configmaps verbs: - get - apiGroups: [""] resources: - nodes/status verbs: # Needed for clearing NodeNetworkUnavailable flag. - patch # Calico stores some configuration information in node annotations. - update # Watch for changes to Kubernetes NetworkPolicies. - apiGroups: ["networking.k8s.io"] resources: - networkpolicies verbs: - watch - list # Used by Calico for policy information. - apiGroups: [""] resources: - pods - namespaces - serviceaccounts verbs: - list - watch # The CNI plugin patches pods/status. - apiGroups: [""] resources: - pods/status verbs: - patch # Calico monitors various CRDs for config. - apiGroups: ["crd.projectcalico.org"] resources: - globalfelixconfigs - felixconfigurations - bgppeers - globalbgpconfigs - bgpconfigurations - ippools - ipamblocks - globalnetworkpolicies - globalnetworksets - networkpolicies - networksets - clusterinformations - hostendpoints - blockaffinities verbs: - get - list - watch # Calico must create and update some CRDs on startup. - apiGroups: ["crd.projectcalico.org"] resources: - ippools - felixconfigurations - clusterinformations verbs: - create - update # Calico stores some configuration information on the node. - apiGroups: [""] resources: - nodes verbs: - get - list - watch # These permissions are only requried for upgrade from v2.6, and can # be removed after upgrade or on fresh installations. - apiGroups: ["crd.projectcalico.org"] resources: - bgpconfigurations - bgppeers verbs: - create - update # These permissions are required for Calico CNI to perform IPAM allocations. - apiGroups: ["crd.projectcalico.org"] resources: - blockaffinities - ipamblocks - ipamhandles verbs: - get - list - create - update - delete - apiGroups: ["crd.projectcalico.org"] resources: - ipamconfigs verbs: - get # Block affinities must also be watchable by confd for route aggregation. - apiGroups: ["crd.projectcalico.org"] resources: - blockaffinities verbs: - watch # The Calico IPAM migration needs to get daemonsets. These permissions can be # removed if not upgrading from an installation using host-local IPAM. - apiGroups: ["apps"] resources: - daemonsets verbs: - get --- apiVersion: rbac.authorization.k8s.io/v1 kind: ClusterRoleBinding metadata: name: calico-node roleRef: apiGroup: rbac.authorization.k8s.io kind: ClusterRole name: calico-node subjects: - kind: ServiceAccount name: calico-node namespace: kube-system --- # Source: calico/templates/calico-node.yaml # This manifest installs the calico-node container, as well # as the CNI plugins and network config on # each master and worker node in a Kubernetes cluster. kind: DaemonSet apiVersion: apps/v1 metadata: name: calico-node namespace: kube-system labels: k8s-app: calico-node spec: selector: matchLabels: k8s-app: calico-node updateStrategy: type: RollingUpdate rollingUpdate: maxUnavailable: 1 template: metadata: labels: k8s-app: calico-node annotations: # This, along with the CriticalAddonsOnly toleration below, # marks the pod as a critical add-on, ensuring it gets # priority scheduling and that its resources are reserved # if it ever gets evicted. scheduler.alpha.kubernetes.io/critical-pod: '' spec: nodeSelector: kubernetes.io/os: linux hostNetwork: true tolerations: # Make sure calico-node gets scheduled on all nodes. - effect: NoSchedule operator: Exists # Mark the pod as a critical add-on for rescheduling. - key: CriticalAddonsOnly operator: Exists - effect: NoExecute operator: Exists serviceAccountName: calico-node # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods. terminationGracePeriodSeconds: 0 priorityClassName: system-node-critical initContainers: # This container performs upgrade from host-local IPAM to calico-ipam. # It can be deleted if this is a fresh installation, or if you have already # upgraded to use calico-ipam. - name: upgrade-ipam image: calico/cni:v3.14.2 command: ["/opt/cni/bin/calico-ipam", "-upgrade"] env: - name: KUBERNETES_NODE_NAME valueFrom: fieldRef: fieldPath: spec.nodeName - name: CALICO_NETWORKING_BACKEND valueFrom: configMapKeyRef: name: calico-config key: calico_backend volumeMounts: - mountPath: /var/lib/cni/networks name: host-local-net-dir - mountPath: /host/opt/cni/bin name: cni-bin-dir securityContext: privileged: true # This container installs the CNI binaries # and CNI network config file on each node. - name: install-cni image: calico/cni:v3.14.2 command: ["/install-cni.sh"] env: # Name of the CNI config file to create. - name: CNI_CONF_NAME value: "10-calico.conflist" # The CNI network config to install on each node. - name: CNI_NETWORK_CONFIG valueFrom: configMapKeyRef: name: calico-config key: cni_network_config # Set the hostname based on the k8s node name. - name: KUBERNETES_NODE_NAME valueFrom: fieldRef: fieldPath: spec.nodeName # CNI MTU Config variable - name: CNI_MTU valueFrom: configMapKeyRef: name: calico-config key: veth_mtu # Prevents the container from sleeping forever. - name: SLEEP value: "false" volumeMounts: - mountPath: /host/opt/cni/bin name: cni-bin-dir - mountPath: /host/etc/cni/net.d name: cni-net-dir securityContext: privileged: true # Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes # to communicate with Felix over the Policy Sync API. - name: flexvol-driver image: calico/pod2daemon-flexvol:v3.14.2 volumeMounts: - name: flexvol-driver-host mountPath: /host/driver securityContext: privileged: true containers: # Runs calico-node container on each Kubernetes node. This # container programs network policy and routes on each # host. - name: calico-node image: calico/node:v3.14.2 env: # Use Kubernetes API as the backing datastore. - name: DATASTORE_TYPE value: "kubernetes" # Wait for the datastore. - name: WAIT_FOR_DATASTORE value: "true" # Set based on the k8s node name. - name: NODENAME valueFrom: fieldRef: fieldPath: spec.nodeName # Choose the backend to use. - name: CALICO_NETWORKING_BACKEND valueFrom: configMapKeyRef: name: calico-config key: calico_backend # Cluster type to identify the deployment type - name: CLUSTER_TYPE value: "k8s,bgp" # Auto-detect the BGP IP address. - name: IP value: "autodetect" # Enable IPIP - name: CALICO_IPV4POOL_IPIP value: "Always" # Enable or Disable VXLAN on the default IP pool. - name: CALICO_IPV4POOL_VXLAN value: "Never" # Set MTU for tunnel device used if ipip is enabled - name: FELIX_IPINIPMTU valueFrom: configMapKeyRef: name: calico-config key: veth_mtu # Set MTU for the VXLAN tunnel device. - name: FELIX_VXLANMTU valueFrom: configMapKeyRef: name: calico-config key: veth_mtu # The default IPv4 pool to create on startup if none exists. Pod IPs will be # chosen from this range. Changing this value after installation will have # no effect. This should fall within `--cluster-cidr`. # - name: CALICO_IPV4POOL_CIDR # value: "192.168.0.0/16" # Disable file logging so `kubectl logs` works. - name: CALICO_DISABLE_FILE_LOGGING value: "true" # Set Felix endpoint to host default action to ACCEPT. - name: FELIX_DEFAULTENDPOINTTOHOSTACTION value: "ACCEPT" # Disable IPv6 on Kubernetes. - name: FELIX_IPV6SUPPORT value: "false" # Set Felix logging to "info" - name: FELIX_LOGSEVERITYSCREEN value: "info" - name: FELIX_HEALTHENABLED value: "true" securityContext: privileged: true resources: requests: cpu: 250m livenessProbe: exec: command: - /bin/calico-node - -felix-live - -bird-live periodSeconds: 10 initialDelaySeconds: 10 failureThreshold: 6 readinessProbe: exec: command: - /bin/calico-node - -felix-ready - -bird-ready periodSeconds: 10 volumeMounts: - mountPath: /lib/modules name: lib-modules readOnly: true - mountPath: /run/xtables.lock name: xtables-lock readOnly: false - mountPath: /var/run/calico name: var-run-calico readOnly: false - mountPath: /var/lib/calico name: var-lib-calico readOnly: false - name: policysync mountPath: /var/run/nodeagent volumes: # Used by calico-node. - name: lib-modules hostPath: path: /lib/modules - name: var-run-calico hostPath: path: /var/run/calico - name: var-lib-calico hostPath: path: /var/lib/calico - name: xtables-lock hostPath: path: /run/xtables.lock type: FileOrCreate # Used to install CNI. - name: cni-bin-dir hostPath: path: /opt/cni/bin - name: cni-net-dir hostPath: path: /etc/cni/net.d # Mount in the directory for host-local IPAM allocations. This is # used when upgrading from host-local to calico-ipam, and can be removed # if not using the upgrade-ipam init container. - name: host-local-net-dir hostPath: path: /var/lib/cni/networks # Used to create per-pod Unix Domain Sockets - name: policysync hostPath: type: DirectoryOrCreate path: /var/run/nodeagent # Used to install Flex Volume Driver - name: flexvol-driver-host hostPath: type: DirectoryOrCreate path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds --- apiVersion: v1 kind: ServiceAccount metadata: name: calico-node namespace: kube-system --- # Source: calico/templates/calico-kube-controllers.yaml # See https://github.com/projectcalico/kube-controllers apiVersion: apps/v1 kind: Deployment metadata: name: calico-kube-controllers namespace: kube-system labels: k8s-app: calico-kube-controllers spec: # The controllers can only have a single active instance. replicas: 1 selector: matchLabels: k8s-app: calico-kube-controllers strategy: type: Recreate template: metadata: name: calico-kube-controllers namespace: kube-system labels: k8s-app: calico-kube-controllers annotations: scheduler.alpha.kubernetes.io/critical-pod: '' spec: nodeSelector: kubernetes.io/os: linux tolerations: # Mark the pod as a critical add-on for rescheduling. - key: CriticalAddonsOnly operator: Exists - key: node-role.kubernetes.io/master effect: NoSchedule serviceAccountName: calico-kube-controllers priorityClassName: system-cluster-critical containers: - name: calico-kube-controllers image: calico/kube-controllers:v3.14.2 env: # Choose which controllers to run. - name: ENABLED_CONTROLLERS value: node - name: DATASTORE_TYPE value: kubernetes readinessProbe: exec: command: - /usr/bin/check-status - -r --- apiVersion: v1 kind: ServiceAccount metadata: name: calico-kube-controllers namespace: kube-system --- # Source: calico/templates/calico-etcd-secrets.yaml --- # Source: calico/templates/calico-typha.yaml --- # Source: calico/templates/configure-canal.yaml然后执行如下命令:

kubectl apply -f calico.yml #结果如下 [root@k8s-master01 data]# kubectl apply -f calico.yml configmap/calico-config created customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created clusterrole.rbac.authorization.k8s.io/calico-node created clusterrolebinding.rbac.authorization.k8s.io/calico-node created daemonset.apps/calico-node created serviceaccount/calico-node created deployment.apps/calico-kube-controllers created serviceaccount/calico-kube-controllers created再查看结点状态: 发现都已变成Ready

kubectl get nodes NAME STATUS ROLES AGE VERSION k8s-master01 Ready master 37m v1.17.5 k8s-node01 Ready <none> 20m v1.17.5 k8s-node02 Ready <none> 20m v1.17.5kubectl命令自动实例

echo "source <(kubectl completion bash)" >> ~/.bash_profile source ~/.bash_profile